This is the manual for Cyberprobe (version 2.5.1, 6 June 2020).

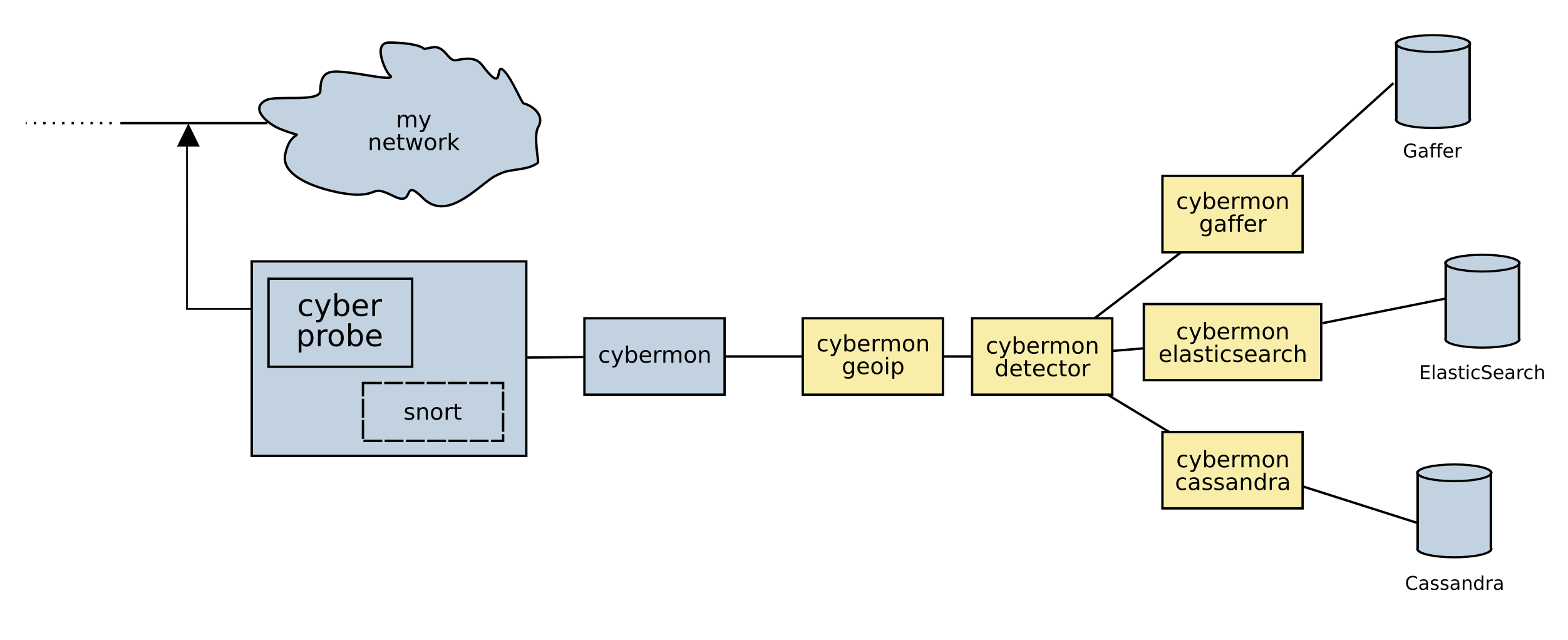

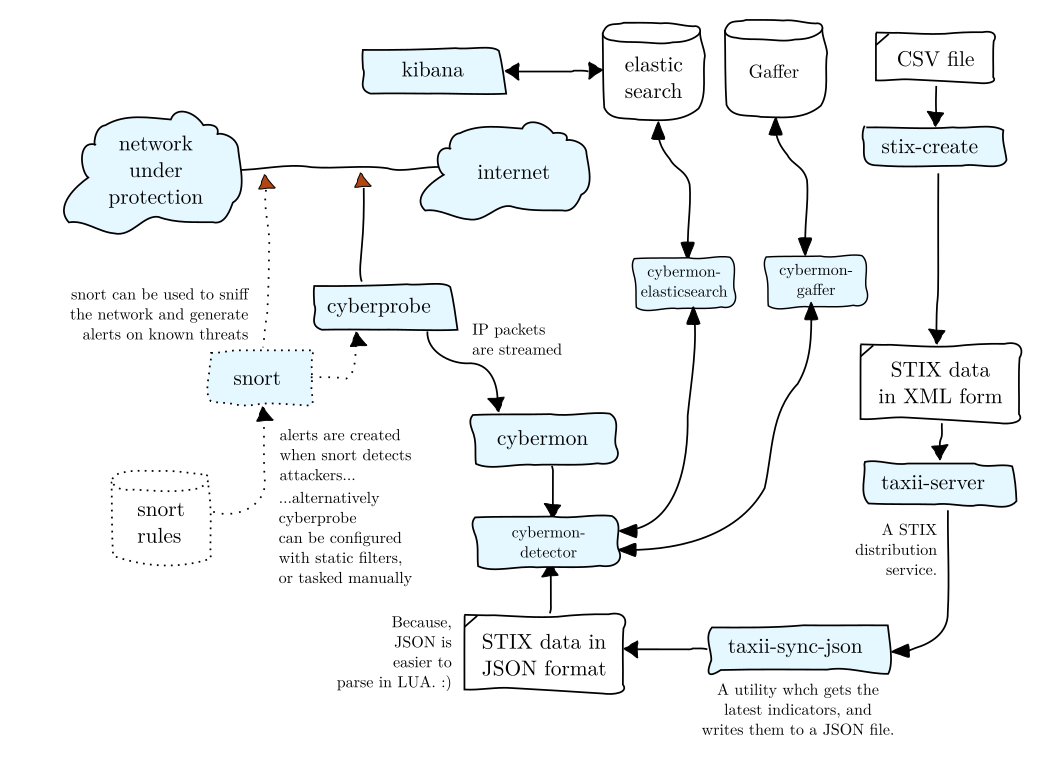

Cyberprobe is a network packet inspection toolkit (Deep Packet Inspection) for real-time monitoring of networks. This has applications in network monitoring, intrusion detection, forensic analysis, and as a defensive platform. Cyberprobe packet inspection works on physical networks, and also in cloud VPCs. There are features that allow cloud-scale deployments.

This is not a single, monolithic intrusion detection toolkit which does everything you want straight out of the box. If that’s what you need, I would suggest you look elsewhere. Instead, Cyberprobe is a set of flexible components which can be combined in many ways to manage a wide variety of packet inspection tasks. If you want to build custom network analytics there are many interfaces that make this straightforward.

The project maintains a number of components, including:

cyberprobe, which collects data packets and forwards them over a network stream protocol in real time. Packet collection can be targeted by IP address or CIDR range, or can be full-take. Collected packets are tagged with a device identifier. cyberprobe can be integrated with Snort to allow dynamic targeting of IP addresses in response to a Snort rule hitting.

cybermon, which receives collected packet streams, performs stateful processing and creates a stream of observations describing network events. The events can be consumed in many different ways, e.g. they can be delivered to a pub/sub system, or presented to a gRPC service.

The event handling is implemented as a function written in Lua, so you can add your own custom event handling.

The architecture has support for AWS Traffic Mirroring, and supports cloud-scale deployments.

The probe, cyberprobe has the following features:

The monitor tool, cybermon has the following features:

cyberprobe instances.

The code is targeted at the Linux platform, although it is generic enough to be applicable to other UN*X-like platforms.

The event stream from cybermon can be presented to a Pulsar exchange in protobuf form, which can then be delivered to further analytics:

evs-alert reports indicator hits in events to standard output.

evs-cassandra loads events into Cassandra.

evs-detector studies events for the presence of indicators. Events are annotated with indicator hits if any are observed.

evs-dump dumps raw event JSON to standard output.

evs-elasticsearch loads events into ElasticSearch.

evs-gaffer loads network information into Gaffer (a graph database).

evs-geoip looks up IP addresses in GeoIP and annotates events with location information.

evs-monitor outputs event information to standard output.

The architecture has support for AWS Traffic Mirroring, and supports cloud-scale deployments:

cyberprobe instances can load-share across multiple cybermon instances behind a load-balancer.

Events from cybermon can be delivered to a pub/sub system to distribute load and permit scale-up.

The easiest way to learn about the software is to follow our Quick Start tutorial.

Cyberprobe release highlights:

2.5: Pulsar and protobuf support added; this permits events from cybermon to be described in protobuf form and delivered to a Pulsar pub/sub exchange. Renamed cybermon- subscribers to evs- (Event Stream).

2.4: cybermon-detector refactored to use FSM-based evaluation of boolean expressions.

2.3: cyberprobe-cli and control API reworked, protobuf and gRPC support. Amazon Linux support.

2.2: Changed the cyberprobe configuration file to use JSON instead of XML. JSON is easier to integrate with automated processes.

2.0.5: Added VXLAN support to cybermon. This allows cybermon to receive the VXLAN protocol, which is used by e.g. AWS Traffic Mirroring.

2.0: New, simpler Lua API. Native JSON encoding boosts the performance of JSON output by 500%.

1.13: cybermon decoders for TLS, GRE and ESP.

1.12.3: Brought subscribers and docker-compose files up to date.

1.11.1: Altered handling of ‘origin’ to make it more resilient.

1.11.0: Added ‘origin’ field to identify whether or not the triggering device caused an event.

1.10.0: Added RabbitMQ / AMQP 0.9.1 support for cybermon and subscribers.

1.8.4: Endace DAG package support added.

1.7.0: Gaffer subscriber brought up to date with the Gaffer 1.0 API. GeoIP and IOC processors added to the subscriber model. Some unmaintained Lua code deprecated, as the subscriber model takes care of the functionality.

1.6.8: Numerous fixes. UUID generation uses a good seed. ElasticSearch loading fixed, Mac compilation fixed.

1.6.0: Changed the ETSI sender so that packet streams are multiplexed over multiple TCP streams.

1.5.1: The previously unbounded queue internal to cybermon now has a queue limit, to prevent unbounded growth.

1.5.0: Timestamp information at the time of packet capture in cyberprobe is now consistently passed through to cybermon and the Lua functions. The Lua API has undergone significant change as a result of passing through timing information.

1.0: Lua invocation mechanism has been replaced by a thread-safe queue function.

Deploying containers is by far the easiest way to get the software running. It is possible to deploy a complete software stack for data capture and analysis using Docker containers, with a minimal amount of software installation. See A containerised processing system.

We use GoCD to build the software, and regularly release packages in DEB and RPM form. Installing from the repository is the easiest way to install if you’re not using containers.

In order to install, you need to add our signing key to your system:

wget -q -O- \
  https://cybermaggedon.github.io/cyberprobe-release/cyberprobe.asc | \
  apt-key add -

We use this signing key:

pub rsa4096 2020-06-05 [SC]
    E684 ECDC B4EA 9F64 DDD0 3D64 3C5A 9A8D 1389 8455
uid [ultimate] Cyber MacGeddon <cybermaggedon@gmail.com>

Once done, add our repository to /etc/apt/sources.list.

For Debian Stretch, add:

deb https://cybermaggedon.github.io/cyberprobe-release/debian stretch main

For Ubuntu Bionic, add:

deb https://cybermaggedon.github.io/cyberprobe-release/ubuntu bionic main

For Ubuntu Disco, add:

deb https://cybermaggedon.github.io/cyberprobe-release/ubuntu disco main

Once added, the cyberprobe installation proceeds thus:

apt-get update
apt-get install cyberprobe

To install using DNF, create file /etc/yum.repos.d/cyberprobe.repo:

[cyberprobe]
name=Cyberprobe
baseurl=https://cybermaggedon.github.io/cyberprobe-release/fedora/$releasever/$basearch/
gpgcheck=1
enabled=1
gpgkey=https://cybermaggedon.github.io/cyberprobe-release/cyberprobe.asc

and then:

dnf install cyberprobe

To install using Yum create file /etc/yum.repos.d/cyberprobe.repo:

[cyberprobe]
name=Cyberprobe
baseurl=https://cybermaggedon.github.io/cyberprobe-release/amazon/$releasever/$basearch/
gpgcheck=1
enabled=1
gpgkey=https://cybermaggedon.github.io/cyberprobe-release/cyberprobe.asc

and then:

yum install cyberprobe

You can download packages manually; packages are currently available for Fedora, CentOS, Debian and Ubuntu. Downloads are available on the project page at http://github.com/cybermaggedon/cyberprobe/releases.

Fedora packages are installed using dnf:

sudo dnf install <package>

Debian and Ubuntu packages are installed using dpkg:

sudo dpkg -i <package>

If there are dependency errors, e.g. because you don’t have some dependencies installed, you can install the missing dependencies with:

sudo apt-get install -f

Note: on many platforms, installing a package just adds the "run time" part of the code. In order to be able to compile code against the run time, you need to install a separate "developers kit" package. On Fedora, for instance, both libpcap and libpcap-devel are needed in order to be able to build this code from source.

Note also that Lua packages can be a little strange: sometimes the package will exist in your distribution, at other times you need to install a utility called luarocks to install the package.

Source downloads are available on the project page at http://github.com/cybermaggedon/cyberprobe/releases; look for the .tar.gz file.

These files can be unpacked, then configured, built and installed:

tar xvfz cyberprobe-X.Y.tar.gz
cd cyberprobe-X.Y
./configure
make
sudo make install

README.linux provides some hints for Linux users. If installing on MacOS, read README.mac.

To check out the latest code using git:

git clone https://github.com/cybermaggedon/cyberprobe

To build, use:

autoreconf -fi
./configure
make
sudo make install

The project page, hosted on GitHub, is at https://cybermaggedon.github.io/cyberprobe-docs/.

There are two Docker repositories containing the Cyberprobe distribution. See http://hub.docker.com/r/cybermaggedon/cyberprobe.

docker.io/cybermaggedon/cyberprobe

docker.io/cybermaggedon/cybermon

The only difference is the default command which is executed on running the container. Here are some container invocations you may find useful:

To run cyberprobe, you will need to create a configuration file and map it into the container:

sudo docker run -it --rm -v /etc/cyberprobe:/etc/cyberprobe_host \
  docker.io/cybermaggedon/cyberprobe \
  cyberprobe /etc/cyberprobe_host/cyberprobe.fg

To run cybermon (the cybermon container exposes port 9000):

sudo docker run -it --rm -p 9000:9000 -v \
  --net=host --privileged --cap-add=NET_ADMIN \
  docker.io/cybermaggedon/cybermon \
  cybermon -p 9000 -c /etc/cyberprobe/amqp-topic.lua

To run evs-cassandra, you need to know the IP address of the host side of the Docker bridge network, and provide the addresses of the Cassandra servers:

sudo docker run -it --rm -v \
  docker.io/cybermaggedon/cybermon \
  evs-cassandra cyberprobe \
  10.142.146.6,10.142.146.8

Running cyberprobe in a container makes the deployment easier, but it needs to run with elevated privileges in order to sniff the network, which reduces some of the advantages of running it in a container.

The code doesn’t have many dependencies. Exotic dependencies are:

libpcap.

tcpdump - not needed to build the software, but we use it in the tutorial.

ncurses, needed for the command line admin utility.

readline, needed for the command line admin utility.

libtaxii and stix are Python modules made available at http://mitre.org which can be downloaded using pip.

• Preparation
• Using cyberprobe
• Management interface
• Integration with snort
• Using cybermon
• Writing your own configuration file
• Visualisation
• Threat indicators

For installation, see Obtaining the software. There’s a fair amount of development taking place in the git repository, so you probably want to get a package, or use the latest release on the downloads page (http://github.com/cybermaggedon/cyberprobe/releases).

The compilation process compiles the following commands:

cyberprobe: Packet capture.

cybermon: Data analyser; analyses the data streams and reports events.

etsi-rcvr: Test decoder for ETSI format data.

cyberprobe-cli: Cyberprobe control command-line client.

evs-cassandra: Pub/sub subscriber, delivers events to Cassandra.

evs-elasticsearch: Pub/sub subscriber, delivers events to ElasticSearch.

evs-gaffer: Pub/sub subscriber, delivers events to Gaffer.

evs-geoip: Pub/sub subscriber, uses GeoIP to add location information to events, and then republishes them.

evs-detector: Pub/sub subscriber, looks for matches for STIX IOCs, adds IOC information to events, and then republishes them.

evs-dump: Pub/sub subscriber, dumps out raw JSON messages.

evs-alert: Pub/sub subscriber, alerts on matching IOCs.

If it installs / builds without errors, then it’s time to start something up. If you have problems you can’t resolve, raise an issue at https://github.com/cybermaggedon/cyberprobe/issues.

The simplest way to use cyberprobe is to use it on a Linux workstation, or in a virtual machine. Maybe you’re using a Linux desktop now? If so, you could use it to capture all the data going to/from the internet. This will be a static configuration in order to keep things simple. We’ll do dynamic tracking later.

In the next few steps, you’ll use cyberprobe to capture some data on your workstation and stream it to etsi-rcvr, so that you know it’s working. But first, you’ll need to collect some information about your configuration.

You need to know the name of the network interface you are using. The command /sbin/ifconfig will show you all the network interfaces your machine knows about, e.g.

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
    inet 127.0.0.1 netmask 255.0.0.0
    inet6 ::1 prefixlen 128 scopeid 0x10
    [etc.]

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
    inet 192.168.1.80 netmask 255.255.255.0
    inet6 fe80::a60:6eff:fe81:7a75 prefixlen 64
    [etc.]

The lo interface is a loopback interface, and isn’t really on the network, so ignore that. It’s an interface that gets packets going to 127.0.0.1 and makes sure they end up handled by your workstation. Your interface is quite likely to be called something like eth0. The other thing you need to know is the IP address of your workstation. The IP address is associated with an interface, so in the above example, I can see I have an IP address 192.168.1.80.
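If you would rather script this discovery than read ifconfig output, the sketch below uses only Python’s standard socket module. It is an illustration, not part of Cyberprobe: list_interfaces and outbound_ip are hypothetical helper names, and the "outbound" address is simply the source address the kernel would pick for traffic towards the internet, which on a typical single-interface workstation is the address you want to target.

```python
import socket


def list_interfaces():
    """Return the names of the network interfaces the kernel knows about."""
    # socket.if_nameindex() returns (index, name) pairs on Linux and other UN*Xes.
    return [name for _index, name in socket.if_nameindex()]


def outbound_ip(probe_addr="192.0.2.1", probe_port=9):
    """Guess this workstation's own IP address, or return None.

    Connecting a UDP socket sends no packets: the kernel just chooses a
    route and a source address, which getsockname() then reports.
    192.0.2.1 is a reserved documentation address, used only as a probe.
    """
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        s.connect((probe_addr, probe_port))
        return s.getsockname()[0]
    except OSError:
        return None  # e.g. no default route is configured
    finally:
        s.close()
```

Calling print(list_interfaces(), outbound_ip()) gives the two facts the configuration needs. On a machine with several interfaces, check that the reported address really belongs to the interface you intend to capture on.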

Note: on some networks (like mine) the IP address is allocated dynamically. In my case, the IP address is allocated by the broadband router. If things aren’t working as you expect, you should check your IP address to check your workstation hasn’t been allocated a new, different address. In my case, I can tell the broadband router to permanently allocate a particular IP address to this workstation, so that it won’t change.

The source code contains a file config.json which is a good template for any configuration you’re going to build. However, for the purpose of this discussion, let’s start from scratch. In order to do anything useful, there are three essential elements to a cyberprobe configuration file: interfaces, targets and endpoints. The system won’t do anything useful without those three configuration elements defined. Let’s start with a very simple configuration.

Using your favourite text editor, create a text file, say c.cfg, with the following contents:

{
  "interfaces": [
    { "interface": "eth0" }
  ]
}

Note: you should replace the eth0 string with the name of your network interface. Remember? We discovered that when playing with the ifconfig command.

We’re ready to roll. We need to run as a privileged user because cyberprobe captures data off the network interface. So, running as root, you need to locate the place where you compiled the code, and run cyberprobe, giving it the name of the configuration file you just created:

cyberprobe c.cfg

If everything goes to plan, you should see the following output:

Capture on interface eth0 started.

If you see an error message, the obvious two things to check are:

Did you get the interface name right? See the ifconfig discussion above.

Are you running as a privileged user?

If you see no output at all, check that your configuration file is correct.

Once you are seeing the "Capture on interface eth0" line, then you’ve achieved success in this step, and are ready to move on.

If you have everything working, there’s one thing to note before moving on: cyberprobe treats a broken configuration file the same as an empty configuration file. With cyberprobe running, edit the configuration file and delete the opening brace (‘{’) on the first line.

You’ve now broken the configuration file. It’s not valid JSON any more, so the parsing fails. You should see this output from cyberprobe:

Capture on interface eth0 stopped.

If you repair the damage to the configuration file, everything will start working again. The lesson here is: if you find that cyberprobe won’t recognise any resources, it’s likely that your configuration file is invalid. Use a JSON parser to check that the file is valid if you’re not getting the results you expect.
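For a quick syntax check, python3 -m json.tool c.cfg prints the parsed file, or the position of the first error. The sketch below goes a step further and also flags missing blocks. check_config and check_config_text are hypothetical helper names, and the rule that interfaces, targets and endpoints must all be present and non-empty only reflects this tutorial’s static configuration; the Snort integration later deliberately leaves targets empty.

```python
import json

# The three blocks the tutorial calls essential for a useful static setup.
ESSENTIAL = ("interfaces", "targets", "endpoints")


def check_config_text(text):
    """Return a list of problems found in a cyberprobe JSON configuration.

    An empty list means the text parsed and every essential block is
    present and non-empty.
    """
    try:
        config = json.loads(text)
    except json.JSONDecodeError as err:
        # JSONDecodeError records where parsing failed.
        return [f"invalid JSON at line {err.lineno}, column {err.colno}: {err.msg}"]
    if not isinstance(config, dict):
        return ["top level is not a JSON object"]
    return [f"'{key}' block is missing or empty"
            for key in ESSENTIAL if not config.get(key)]


def check_config(path):
    """Read a configuration file from disk and check it."""
    with open(path) as f:
        return check_config_text(f.read())
```

Run against the deliberately broken file from the previous step, print(check_config("c.cfg")) reports where parsing failed instead of leaving you to notice that capture has silently stopped.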

We have cyberprobe running, but it isn’t doing anything useful. Remember, I said that a useful configuration consists of three minimal elements: interfaces, targets and endpoints? Well, currently we only have interfaces defined. That means that cyberprobe is capturing packets off of the network, but throwing them away.

Let’s add a target. Add a targets block to the configuration file. We need an entry describing the IP address of my workstation. Remember? We discovered that with the ifconfig command earlier. Instead of 192.168.1.80, use the IP address of your workstation.

{
  "interfaces": [
    { "interface": "eth0" }
  ],
  "targets": [
    {
      "address": "192.168.1.80",
      "device": "123456"
    }
  ]
}

If successful, you should see new output from cyberprobe:

Added target 192.168.1.80 -> 123456.

The target configuration allows specification of IPv4 and IPv6 addresses, and addresses can include a mask, which allows IP address matching to be applied in a wildcard configuration. See cyberprobe configuration.
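To make the masking idea concrete, here is a sketch of how a masked target can be matched against a packet address, using Python’s ipaddress module. It models the behaviour described above and is not cyberprobe’s own matching code; matches_target is a hypothetical name, and writing the mask as a CIDR suffix (e.g. 10.0.0.0/8) is an assumption of the sketch.

```python
import ipaddress


def matches_target(address, target):
    """Return True if `address` falls within the `target` specification.

    `target` may be a bare address ("192.168.1.80"), treated as a single
    host (/32 or /128), or carry a mask ("10.0.0.0/8", "fe80::/10").
    """
    addr = ipaddress.ip_address(address)
    # strict=False accepts host bits set beyond the mask and clears them.
    net = ipaddress.ip_network(target, strict=False)
    # An IPv4 address is never "in" an IPv6 network: this returns False.
    return addr in net
```

With strict=False, a target such as fe80:5551:4024:8196:8175::/40 is reduced to its network, fe80:5551:4000::/40, so any address sharing those first 40 bits matches.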

At this step, we’re capturing packets, spotting target addresses, but as there’s no endpoint defined there’s still nowhere to send the data. So, this is still a useless configuration. On to the next step...

Adding an endpoint to the configuration file will define a place where the captured data is sent. Before adding an endpoint, let’s make sure there’s something ready to receive the data.

In a separate terminal window, navigate to the cyberprobe build, and run:

etsi-rcvr 10000 | tcpdump -n -r -

The etsi-rcvr program opens a TCP port listening on port 10000 for a stream of ETSI data, and writes the IP packets it sees to standard output in PCAP format. The tcpdump command receives this PCAP data, and outputs packet summaries.
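The PCAP stream that etsi-rcvr writes uses the classic libpcap file format: a 24-byte global header, then for each packet a 16-byte record header followed by the captured bytes. The sketch below reads such a stream from any binary file object, roughly the first thing tcpdump -r - does before decoding packets. It is illustrative only: read_pcap is a hypothetical name, and it assumes microsecond timestamps (the nanosecond variant is rejected).

```python
import struct

GLOBAL_HEADER_LEN = 24  # magic, version, timezone, sigfigs, snaplen, linktype
RECORD_HEADER_LEN = 16  # ts_sec, ts_usec, incl_len, orig_len


def read_pcap(stream):
    """Yield (timestamp, captured_length) for each packet in a PCAP stream.

    `stream` is any binary file-like object, e.g. sys.stdin.buffer.
    """
    header = stream.read(GLOBAL_HEADER_LEN)
    if len(header) < GLOBAL_HEADER_LEN:
        return  # empty or truncated stream
    # The magic number 0xa1b2c3d4 is written in the writer's byte order.
    if header[:4] == b"\xd4\xc3\xb2\xa1":
        endian = "<"
    elif header[:4] == b"\xa1\xb2\xc3\xd4":
        endian = ">"
    else:
        raise ValueError("not a microsecond-resolution PCAP stream")
    while True:
        record = stream.read(RECORD_HEADER_LEN)
        if len(record) < RECORD_HEADER_LEN:
            return  # end of stream
        ts_sec, ts_usec, incl_len, _orig_len = struct.unpack(endian + "IIII", record)
        data = stream.read(incl_len)
        if len(data) < incl_len:
            return  # final record was cut short
        yield ts_sec + ts_usec / 1e6, incl_len
```

To sit at the end of the pipeline in place of tcpdump, iterate over read_pcap(sys.stdin.buffer) and print each (timestamp, length) pair.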

If that starts successfully, the next step is to plumb a connection from cyberprobe to etsi-rcvr.

Next, edit the configuration file, and add an endpoints block to deliver packets to a local service on port 10000:

{
  "interfaces": [
    { "interface": "eth0" }
  ],
  "targets": [
    {
      "address": "192.168.1.80",
      "device": "123456"
    }
  ],
  "endpoints": [
    {
      "hostname": "localhost",
      "port": 10000,
      "transport": "tcp",
      "type": "etsi"
    }
  ]
}
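Because cyberprobe treats a malformed file as empty, it can be safer to generate the configuration than to edit it by hand. This sketch builds the configuration above as a Python dictionary and writes it in one step. build_config and write_config are hypothetical helpers; the atomic write assumes cyberprobe re-reads the file by its path, so that it only ever sees the old or the complete new version, never a half-written one.

```python
import json
import os


def build_config(interface, address, device, host="localhost", port=10000):
    """Return the tutorial configuration above as a Python dict."""
    return {
        "interfaces": [{"interface": interface}],
        "targets": [{"address": address, "device": device}],
        "endpoints": [{
            "hostname": host,
            "port": port,
            "transport": "tcp",
            "type": "etsi",
        }],
    }


def write_config(path, config):
    """Write `config` as JSON, replacing `path` in a single step."""
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump(config, f, indent=2)
    # os.replace renames atomically on POSIX, so a reader of `path` sees
    # either the old file or the complete new one.
    os.replace(tmp, path)
```

For example, write_config("c.cfg", build_config("eth0", "192.168.1.80", "123456")) reproduces the file shown above.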

If that worked, you should see cyberprobe start the endpoint:

Added endpoint localhost:10000 of type etsi

Hopefully you’ll start to see some output from tcpdump...

At this step, cyberprobe should be forwarding any network traffic your workstation generates to the tcpdump command, so that you see data. An average workstation generates network traffic all the time, so you won’t need to do anything. But if you see nothing, you can, for example, visit the Google home page in a browser on your workstation. You should see something like this pouring from tcpdump:

18:54:24.376838 IP 192.168.1.80.54249 > 212.58.244.71.http: Flags [P.], seq 1:673, ack 1, win 115, options [nop,nop,TS val 129851063 ecr 3366955869], length 672
18:54:24.390768 IP 212.58.244.71.http > 192.168.1.80.54249: Flags [.], ack 673, win 124, options [nop,nop,TS val 3366955882 ecr 129851063], length 0
18:54:24.392909 IP 212.58.244.71.http > 192.168.1.80.54249: Flags [P.], seq 1:1796, ack 673, win 124, options [nop,nop,TS val 3366955884 ecr 129851063], length 1795

At this step, it’s worth having a quick play with the reconnection mechanism. Stop and start etsi-rcvr, and you’ll see that cyberprobe reconnects automatically:

ETSI LI connection to localhost:10000 failed. Will reconnect...
ETSI LI connection to localhost:10000 established.

We don’t guarantee zero data loss on a reconnect.

At this step, we’ll set up a control port, and use it to modify the configuration of cyberprobe.

The first step is to add a controls block to the configuration file:

{
  "interfaces": [
    { "interface": "eth0" }
  ],
  "targets": [
    {
      "address": "192.168.1.80",
      "device": "123456"
    }
  ],
  "endpoints": [
    {
      "hostname": "localhost",
      "port": 10000,
      "transport": "tcp",
      "type": "etsi"
    }
  ],
  "controls": [
    {
      "port": 8888,
      "username": "admin",
      "password": "mypassword"
    }
  ]
}

That declares that a management service needs to be run on port 8888. The authentication details are provided too. You should see this output from cyberprobe:

Starting control on port 8888

Good! Now we need to connect and interrogate the targets list. The API is a simple text protocol with requests and responses encoded in JSON. The cyberprobe-cli command gives you an interactive command-line interface:

cyberprobe-cli localhost 8888

You are then prompted for a username and password:

$ cyberprobe-cli localhost 8888
Connected. You must authenticate.
User: admin
Password: **********
>

Press TAB to see command auto-completion.

> show endpoints
Hostname   Port  Type
--------   ----  ----
localhost  9000  etsi
localhost  9001  etsi
localhost  9002  nhis1.1
> add target my-machine4 ipv6 fe80:5551:4024:8196:8175::/40 dark-net
> show targets
Device       Class  Address           Mask
----         -----  -------           ----
my-machine   ipv4   0.0.0.0           /0
my-machine2  ipv4   10.0.0.0          /8
my-machine4  ipv6   fe80:5551:4000::  /40
my-machine3  ipv6   fe80:4124:5696::  /48
> remove target my-machine4 ipv6 fe80:5551:4024:8196:8175::/40 dark-net
> add interface vxlan:8124 0.5 not port 9000
> show interfaces
Interface   Delay  Filter
---------   -----  ------
vxlan:4789  0.5    not port 10001 and not port 10002
vxlan:4790  0.3
vxlan:8124  0.5    not port 9000

The interface isn’t pretty, but you get the idea. You can change almost everything that you can manage by changing the configuration file.

Note: the management interface changes the active state of cyberprobe, but it doesn’t change the configuration file. So, configuration changes made through the management interface are ’lost’ when you restart cyberprobe.

Note also that you may get some weird results if you use the configuration file AND the control interface to manage the same resources, so you probably don’t want to do that.

See cyberprobe-cli invocation.

In this step, we’ll add the excellent IDS, Snort, to the mix. If you don’t know Snort, it scans network traffic for patterns, and can take various actions when those patterns are discovered. It is typically used to detect network attacks, and the Snort folks maintain a huge collection of patterns that will identify known network attacks. The Snort team maintain the project at http://www.snort.org.

If you want to try out the Snort integration, you need to head over to the Snort home page, download and install Snort. Or install the appropriate package with your distribution.

Once you have it installed, to simplify things, you’ll want to put a rule in place that will definitely identify things on your network. The easiest way is to add a local rule that identifies your workstation. First of all, you’ll want to make sure your Snort configuration file (probably /etc/snort/snort.conf) loads a local rules file. So, it should contain something like this:

# site specific rules
include $RULE_PATH/local.rules

Then, to identify your workstation, add a rule like this to your local rules file (probably /etc/snort/rules/local.rules):

alert tcp 192.168.1.80 any -> any 80 (msg:"Web"; classtype:misc-activity;sid:200; rev:1;)

cyberprobe itself needs to be configured to receive Snort alerts. You do that by adding a block to the configuration file at the top level:

{
  ...
  "snort-alerters": [
    {
      "path": "/var/log/snort/snort_alert",
      "duration": 60
    }
  ]
  ...
}

That says, Snort alerts will result in dynamic collection of data for 60 seconds from identification. While you’re in the configuration file, you can remove the static IP address target line. Make sure the targets block is empty or removed from the configuration:

{
  ...
  "targets": [],
  ...
}

cyberprobe should respond:

Removed target 192.168.1.80 -> 123456.
Start snort alerter on /var/log/snort/snort_alert

Now I can run Snort in IDS mode. Snort needs to run as ’root’:

snort -i eth0 -A unsock -N -l /var/log/snort/ -c /etc/snort/snort.conf

Thanks to our Snort rule, when our workstation generates network data, Snort will detect it, trigger our rule, and alert cyberprobe. You should see cyberprobe say:

Hit on signature ID 200, targeting 192.168.1.80

Also, once the rule is triggered, you should see evidence of packet data from the tcpdump command, as before. cyberprobe causes the targeting to time out after a period of time. If further alerts are seen, the targeting lifetime is extended. If no further alerts are seen, the IP address targeting is deleted. If you can convince your workstation to stop creating network data, e.g. by not using it for a minute or so, then you should see the rule time out:

Stopped targeting on 192.168.1.80

In practice this may be harder than you think, as workstations generate network traffic all the time. You may have to turn off your email clients and close the web browser. Your attempt to silence your workstation may be further thwarted by the operating system checking for patches without you knowing.
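The timeout behaviour described above can be modelled as a table of expiry times: each alert sets an address’s expiry to the alert time plus the configured duration (60 seconds in our snort-alerters block), and a periodic sweep drops addresses whose time has passed. This is a sketch of the described behaviour, not cyberprobe’s implementation; DynamicTargets and its methods are hypothetical, and times are passed in explicitly so the logic is easy to test.

```python
class DynamicTargets:
    """Alert-driven targeting where each alert (re)starts a fixed lifetime."""

    def __init__(self, duration=60.0):
        self.duration = duration
        self.expiry = {}  # address -> time at which targeting stops

    def is_targeted(self, address, now):
        return self.expiry.get(address, float("-inf")) > now

    def alert(self, address, now):
        """Record an alert; return True if targeting has just started."""
        started = not self.is_targeted(address, now)
        # A further alert simply pushes the expiry time out again.
        self.expiry[address] = now + self.duration
        return started

    def expire(self, now):
        """Stop targeting, and return, every address whose lifetime has passed."""
        expired = [a for a, t in self.expiry.items() if t <= now]
        for a in expired:
            del self.expiry[a]
        return expired
```

Checking is_targeted inside alert, rather than mere presence in the table, means an alert arriving after a lifetime has lapsed, but before the next sweep, is still treated as a fresh start.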

Your Snort integration suffers from a particular problem now. The time taken for Snort to inspect some packets, generate an alert and for cyberprobe to get the IP address targeted is not zero. It is hard to measure, but it is going to be a significant chunk of a millisecond. The problem is that by the time cyberprobe is targeting the IP address, the network attacker’s packets have long gone. The result is that, while cyberprobe is now targeting the attacker, it won’t capture the original network attack.

Our solution is to introduce a packet delay in cyberprobe. The packets entering cyberprobe are kept in a time-delay queue and are processed once that delay expires. You can configure a delay by putting the delay attribute in an interface specification, e.g.

...
"interfaces": [
  { "interface": "eth0", "delay": 0.2 }
]
...

0.2 seconds should be plenty. You should be able to see this delay in action: when you generate network traffic, you should see a delay between the network activity taking place and the corresponding burst of activity from tcpdump.
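The time-delay queue can be sketched as follows. Every packet is held for the same fixed delay, so release times increase in arrival order and a plain FIFO is enough. DelayQueue is a hypothetical name and this is not cyberprobe’s code; the point it illustrates is that if a Snort alert arrives within the delay window, the packets that triggered it are still queued when the target is added, so they can still be captured.

```python
from collections import deque


class DelayQueue:
    """Hold packets for a fixed delay, then release them in arrival order.

    Every packet gets the same delay, so release times are non-decreasing
    as long as `now` never goes backwards; a FIFO deque is therefore
    enough and no priority queue is needed.
    """

    def __init__(self, delay):
        self.delay = delay
        self.queue = deque()  # entries are (release_time, packet)

    def push(self, packet, now):
        """Queue a packet captured at time `now` (seconds)."""
        self.queue.append((now + self.delay, packet))

    def pop_ready(self, now):
        """Remove and return every packet whose delay has expired by `now`."""
        ready = []
        while self.queue and self.queue[0][0] <= now:
            ready.append(self.queue.popleft()[1])
        return ready
```

With a 0.2 second delay, an alert that arrives 0.05 seconds after the attack packets still finds them waiting in the queue.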

At this point, you’ve completed the guided tour of cyberprobe, the packet capture tool. If that’s all you need, the rest of the tutorial will probably have less interest to you: in the following steps, we’ll start to analyse and act on the captured data.

The previous 9 steps have all been about cyberprobe. If you’ve got this far successfully, you pretty much know all there is to know about cyberprobe. It is time to start doing something more useful with all that data you are capturing. In this step we’ll start up cybermon and look at the data.

Remember that etsi-rcvr command you started in step Adding an endpoint? Stop that, and start cybermon. Two arguments are needed: a TCP port number to receive the data on, and a configuration file which tells it what to do. A number of configuration files are bundled with the source code; there should be a basic one called monitor.lua, which is installed in the etc directory under wherever you installed the software:

cybermon -p 10000 -c /usr/local/etc/cyberprobe/monitor.lua

Now when you generate network traffic, some of the traffic will be presented in a reasonably intelligent form. For example, I do a naming service lookup for www.slashdot.org...

host -t a www.slashdot.org

The DNS protocol is parsed, and presented in a human readable form. I can see the request, and the response:

SNORTc0a80150: 192.168.1.80:54633 -> 192.168.1.1:53. DNS query
    Query: www.slashdot.org

SNORTc0a80150: 192.168.1.1:53 -> 192.168.1.80:54633. DNS response
    Query: www.slashdot.org
    Answer: www.slashdot.org -> 216.34.181.48

I see the query travelling from my workstation to the broadband router, and then the response from the broadband router contains an answer field mapping the name to an address. HTTP protocols are also decoded. Get the Slashdot home page...

wget -O- 'http://www.slashdot.org/'

...and amongst all the other stuff, you see the HTTP request and response...

SNORTc0a80150: 192.168.1.80:34284 -> 216.34.181.45:80. HTTP GET request
    URL /
    Connection: Keep-Alive
    User-Agent: Wget/1.14 (linux-gnu)
    Host: slashdot.org
    Accept: */*

SNORTc0a80150: 216.34.181.45:80 -> 192.168.1.80:34284. HTTP response 200 OK
    URL http://slashdot.org/
    Connection: keep-alive
    Content-Length: 113468
    Date: Mon, 26 Aug 2013 13:13:25 GMT
    Age: 17
    X-Varnish: 1493567531 1493567417
    X-XRDS-Location: http://slashdot.org/slashdot.xrds
    Cache-Control: no-cache
    Vary: Accept-Encoding
    SLASH_LOG_DATA: shtml
    Pragma: no-cache
    Content-Type: text/html; charset=utf-8
    Server: Apache/2.2.3 (CentOS)

In the previous step, you started cybermon with the monitor.lua configuration file.

Configuration file json.lua causes cybermon to output the events as JSON objects.

cybermon -p 10000 -c /usr/local/etc/cyberprobe/json.lua

The quiet.lua configuration file does nothing. It may be a good place to start hacking your own configuration file. Which is exactly what we’ll do in the next step.

Now, take a copy of the quiet.lua configuration file, and have a look at it. It is a Lua module which defines a minimum of one function, ‘event’, which is called when events occur. Lua is a lightweight scripting language which is really good as a configuration language. In quiet.lua the function has an empty body:

observer.event = function(e) end

Let’s get hacking! The function receives a single event table, e. For an http_response event, e.header is a Lua table which contains key/value pairs from the HTTP header, e.url contains the full URL of the response, and e.body contains the payload body as a string. Let’s start simple:

observer.event = function(e)

if e.action == "http_response" then

print(e.url)

end

end

Then run that up...

cybermon -p 10000 -c my.lua

Now, do some web browsing, and you should see a list of URLs flying past. Each web page typically consists of several HTTP requests, but you should be able to see the URLs associated with all of the web pages you visit. Let’s tart that up a little more:

-- This function is called when an HTTP response is observed.

observer.event = function(e)

if e.action == "http_response" then

-- Take first 40 characters of URL

local u = e.url:sub(1,40)

-- Get Content-Type (first 20 characters)

local ct

ct = ""

for key, value in pairs(e.header) do

if key:lower() == "content-type" then

ct = value:sub(1,20)

end

end

io.write(string.format("%-40s %-20s %d\n", u, ct, #e.body))

end

end

That basically outputs three columns: The URL (truncated to 40 characters), the body content type (truncated to 20 characters) and the HTTP response payload length. Here’s what I get from visiting Slashdot:

http://widget-cdn.rpxnow.com/manifest/sh text/javascript;char 42980

http://slashdot.org/                     text/html; charset=u 40105

http://ad.doubleclick.net/adj/ostg.slash text/javascript; cha 5625

http://pagead2.googlesyndication.com/pag application/x-shockw 33347

http://ad.doubleclick.net/adj/ostg.slash text/javascript; cha 540

http://ad.doubleclick.net/adj/ostg.slash text/javascript; cha 42

http://ad.doubleclick.net/adj/ostg.slash text/javascript; cha 452

http://pagead2.googlesyndication.com/pag                      0

So far, this has just been monitoring. It’s time to add data to the network! From Lua, there are a couple of functions available which allow you to put some packets back onto the network.

But first... there’s a problem. You remember in step 9, we added a delay? That’s not going to work with packet forgery, because by the time we’ve forged a packet and sent it on to the network, it’s too late. So, we need to change our interface back so that there’s no delay on the interface. That means, we’re monitoring network data, but we’ll miss the original attack which triggered a Snort alert.

...

"interfaces": [

{ "interface": "eth0", "delay": 0.0 }

],

...

Once you have this code working, you might be able to mess with the delay parameter to see if you can pick a low-latency value that works for you. On my network, a value of 0.02 is low enough for packet forgery to work. Any higher, and the forged packets arrive too late to beat the real packets.

Each event passed to the Lua code carries a context, e.context, which gives access to cybermon information and the packet forgery functions. In this step, we’re going to forge a TCP reset on any connection which is from or to port 80. Hack the configuration file:

observer.event = function(e)

if e.action == "connected_up" then

-- Get TCP ports.

local cls, src_addr, dest_addr

cls, src_addr = e.context:get_src_addr()

cls, dest_addr = e.context:get_dest_addr()

-- check if it is port 80.

if not((src_addr == "80") or (dest_addr == "80")) then

-- Ignore non-HTTP traffic

return

end

-- TCP reset

print("Reset on HTTP connection.")

e.context:forge_tcp_reset(e.context)

end

end

Now, before we go any further: cybermon needs to run as root in order to use either of the packet forgery functions. Packet forgery needs access to the raw IP socket layer, which is a privileged operation. Start that up:

sudo cybermon -p 10000 -c my.lua

Now start web browsing, and you should see a bunch of "Reset on HTTP connection" messages. Also, you’ll see a page saying "The connection was reset" in your web browser. That’s a fairly anti-social configuration to run on any network. See the forge-reset.lua example for a more useful configuration. It disrupts network traffic going to/from an SSH server which isn’t from your administration workstation.

On any network with an SSH service open to the outside world, you might want to use firewall rules to prevent access to the SSH service from addresses outside of your network, but you could use cybermon as a belt-and-braces protection mechanism.

Another example is where you know the user community on your network is being targeted by phishing emails. Your first step is to try to get the phishing emails out of their inboxes, getting your email provider to filter the attacks. But a backup defence would be to make sure your users can’t get to the phisher’s web site. The event function allows us to reset requests going to a particular web site:

observer.event = function (e)

if e.action == "http_request" then

if e.header["Host"] == "example.org" then

print("Reset on HTTP request")

e.context:forge_tcp_reset(e.context)

end

if e.header["Host"] == "www.example.org" then

print("Reset on HTTP request")

e.context:forge_tcp_reset(e.context)

end

end

end
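The Lua example above compares the Host header exactly. It would miss requests for EXAMPLE.org or example.org:8080, and it depends on the header key being spelled ‘Host’. Below, the normalisation is sketched in Python so the logic is easy to test. BLOCKED_HOSTS, host_of and should_reset are hypothetical names. To use the same steps inside cybermon, you would need to port them to Lua.

```python
from typing import Dict, Set

# Hypothetical blocklist: replace with the phishing hosts you care about.
BLOCKED_HOSTS: Set[str] = {"example.org", "www.example.org"}


def host_of(header: Dict[str, str]) -> str:
    """Return the Host header value, looking the key up case-insensitively."""
    for key, value in header.items():
        if key.lower() == "host":
            return value
    return ""


def should_reset(header: Dict[str, str], blocked: Set[str] = BLOCKED_HOSTS) -> bool:
    """Decide whether an HTTP request with this header should be reset."""
    host = host_of(header).strip().lower()
    host = host.split(":", 1)[0]  # drop an optional :port suffix (hostnames only)
    host = host.rstrip(".")       # treat "example.org." like "example.org"
    return host in blocked
```

Using a set keeps each lookup constant-time, which matters more once the blocklist grows beyond a couple of names.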

In this step, we’ll detect a particular DNS request, and forge a response. First of all, you’ll need to familiarise yourself with host which is a useful DNS test tool. e.g.

$ host -t a example.org

example.org has address 93.184.216.119

The example.org name has been resolved to a particular IP address. Let’s hack the event function in my.lua to handle DNS messages:

-- This function is called when a DNS message is observed.

local observer = {}

observer.event = function(e)

if e.action ~= "dns_message" then

return

end

if e.header.qr == 0 and #e.queries == 1 and e.queries[1].name == "example.org"

and e.queries[1].type == 1 and e.queries[1].class == 1 then

-- Send a fake response

-- Set query/response flag to 'response'

local header = e.header

header.qr = 1

header.ancount = 2

-- Two answers, give example.org 2 alternative IP addresses.

local answers = {}

answers[1] = {}

answers[1].name = "example.org"

answers[1].type = 1

answers[1].class = 1

answers[1].rdaddress = "1.2.3.4"

answers[2] = {}

answers[2].name = "example.org"

answers[2].type = 1

answers[2].class = 1

answers[2].rdaddress = "5.6.7.8"

-- Two answers

e.header.ancount = 2

io.write("Forging DNS response!\n")

e.context:forge_dns_response(header, e.queries, answers, {}, {})

end

end

-- Return the table

return observer

So, this example checks that the query is one we want to mess with. If it is, we turn the query structures into response structures, and hand them back to cybermon to do a forgery. The above example forges the addresses 1.2.3.4 and 5.6.7.8. Start up cybermon with the script, as root, since packet forgery is a privileged operation:

sudo cybermon -p 10000 -c my.lua

If everything is working, your host command will show a different result:

$ host -t a example.org

example.org has address 1.2.3.4
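To see what turning a query into a response means on the wire, here is a Python sketch. It builds an A-record query and forges a response from it using the standard struct and socket modules. It illustrates the DNS message format only. It is not cybermon’s forge_dns_response implementation. It assumes the query has exactly one question and no additional records (for example, no EDNS OPT record). The function names and the 60-second TTL are arbitrary choices.

```python
import socket
import struct
from typing import List


def build_query(name: str, qid: int = 0x1234) -> bytes:
    """Build a minimal DNS query for an A record (type 1, class IN)."""
    # Header: ID, flags (RD set), QDCOUNT=1, ANCOUNT=NSCOUNT=ARCOUNT=0
    header = struct.pack("!HHHHHH", qid, 0x0100, 1, 0, 0, 0)
    # QNAME as length-prefixed labels, terminated by a zero byte
    qname = b"".join(bytes([len(label)]) + label.encode("ascii")
                     for label in name.split(".")) + b"\x00"
    return header + qname + struct.pack("!HH", 1, 1)


def forge_response(query: bytes, addresses: List[str], ttl: int = 60) -> bytes:
    """Turn a single-question query into a response with one A record per address."""
    qid, flags, qdcount, _, _, _ = struct.unpack("!HHHHHH", query[:12])
    flags |= 0x8000  # set the QR bit: this message is a response
    header = struct.pack("!HHHHHH", qid, flags, qdcount, len(addresses), 0, 0)
    question = query[12:]  # copy the question section unchanged
    answers = b""
    for address in addresses:
        # 0xC00C is a compression pointer to the question name at offset 12
        answers += struct.pack("!HHHIH", 0xC00C, 1, 1, ttl, 4)
        answers += socket.inet_aton(address)
    return header + question + answers
```

The two steps mirror the Lua script: flip the query/response flag and set the answer count, then append one answer record per forged address.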

DNS forgery has applications in blocking access to a phisher’s resources on the internet: you might want to redirect your users to an address which is inside your network.

The cybermon configuration documentation describes the Lua interface in detail, if you want to find out what else you can do in your Lua code.


Now we need somewhere to store the observations which cybermon discovers. There are many candidates for a storage repository, but my favourite for this sort of scenario is the excellent ElasticSearch (http://www.elasticsearch.org/). It is flexible, offers a huge amount of functionality, and is incredibly simple to interface with, thanks to its JSON API. So, your next action is to head over to the download page (http://www.elasticsearch.org/download/) and get hold of the latest version. I’m using version 7.0 to build this tutorial, but the ElasticSearch API has proven hugely stable, so this should work with the latest.

The easiest way to run ElasticSearch is as a Docker container, although you could download and run the distribution.

docker run --name elasticsearch -p 9200:9200 \

elasticsearch:7.0

One brilliant thing about ElasticSearch is that it needs almost no configuration to get an instance started. You will need to make one configuration change to ElasticSearch if there are other instances running on your network: you need to change cluster.name to some unique string in config/elasticsearch.yml, otherwise your ElasticSearch instance might join another cluster on your network, which could complicate things.

You can check you have ElasticSearch up and running using a command such as this:

wget -q -O- http://localhost:9200

The response will look something like this:

{

"name" : "gAbVXGZ",

"cluster_name" : "docker-cluster",

"cluster_uuid" : "TPZLBGYnTNqe0-LVLiF6yw",

"version" : {

"number" : "7.0.0",

"build_hash" : "bd92e7f",

"build_date" : "2017-12-17T20:23:25.338Z",

"build_snapshot" : false,

"lucene_version" : "7.1.0",

"minimum_wire_compatibility_version" : "5.6.0",

"minimum_index_compatibility_version" : "5.0.0"

},

"tagline" : "You Know, for Search"

}

Once ElasticSearch is running, you can get cybermon to load observations into it. Before we do that, we need Pulsar to provide the pub/sub infrastructure:

docker run -it \

--name pubsub \

-p 6650:6650 \

-p 8080:8080 \

apachepulsar/pulsar:2.5.2 \

bin/pulsar standalone

Next we need to run two commands. First, cybermon is run to output events on a Pulsar pub/sub exchange:

cybermon -p 10000 -c /usr/local/etc/cyberprobe/pulsar.lua

While that’s running, we can start the ElasticSearch loader:

evs-elasticsearch cyberprobe

After some network data has been observed, you should be able to see results loaded into ElasticSearch using the following command:

es=localhost:9200

curl -s -XPOST -H 'Content-Type: application/json' \

"http://$es/cyberprobe/_search?pretty=true" -d '

{

"query" : {

"match_all": {}

}

}

'

You should see some stuff which looks like data scrolling past on the screen. If your response looks like the following result, that’s not so good, as it means there are no results. See hits.total? Zero means no results.

{

"took" : 1,

"timed_out" : false,

"_shards" : {

"total" : 5,

"successful" : 5,

"failed" : 0

},

"hits" : {

"total" : 0,

"max_score" : null,

"hits" : [ ]

}

}
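The same check can be scripted. Below is a minimal Python sketch that uses only the standard library. It sends the match_all search shown above and reports hits.total. ElasticSearch 7 reports hits.total as an object with a value field, while the example above shows the older bare integer, so the sketch accepts both forms. The function names are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict


def search_request(es: str, index: str = "cyberprobe") -> urllib.request.Request:
    """Build the match_all search request shown above."""
    body = json.dumps({"query": {"match_all": {}}}).encode("utf-8")
    return urllib.request.Request(
        f"http://{es}/{index}/_search",
        data=body,
        headers={"Content-Type": "application/json"},  # required by ElasticSearch 6+
        method="POST",
    )


def hit_count(response: Dict[str, Any]) -> int:
    """Read hits.total from a search response."""
    total = response.get("hits", {}).get("total", 0)
    if isinstance(total, dict):
        # ElasticSearch 7: {"value": N, "relation": "eq"}; "gte" means N is a lower bound
        return int(total.get("value", 0))
    return int(total)  # older versions: a bare integer


def count_events(es: str = "localhost:9200") -> int:
    """Run the search against a live ElasticSearch and return the hit count."""
    with urllib.request.urlopen(search_request(es)) as resp:
        return hit_count(json.load(resp))
```

With ElasticSearch on localhost:9200, count_events() returns the number of stored events. Zero means nothing has been loaded yet.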

If you see a lot of information scrolling past on the screen, that’s good.

evs-elasticsearch maps the cybermon observations into a form which is appropriate to store in ElasticSearch.

Having loaded the observations into ElasticSearch, it’s easy to do some visualisation with Kibana. Kibana is a brilliant, user-configurable dashboard package designed to sit on ElasticSearch. The dashboard runs in your browser.

First thing to do is to run up a Kibana container. Kibana is made by the ElasticSearch people; the download page is at http://www.elasticsearch.co/downloads/kibana.

Run a Kibana container:

docker run --name kibana \

-e ELASTICSEARCH_URL=http://elasticsearch:9200/ -p 5601:5601 \

--link elasticsearch:elasticsearch \

kibana:7.0

Kibana starts on port 5601, so point your browser at e.g. http://localhost:5601 and hopefully you will see Kibana’s "Welcome to Kibana" screen.

Read the Kibana tutorial and start playing with the data. The first thing you need to do is create an index pattern for the cyberprobe index, with time as the time field. Then go to the Visualize tab to see raw data.

Once you have data loading into ElasticSearch, you may want to install our basic dashboards. These are installed at:

/usr/local/share/doc/cyberprobe/kibana-dashboards.json


Cyberprobe includes a subscriber which applies indicators to events. When an event matches an indicator, information about the indicator is tagged into the event.

Indicator support is present in the evs-detector subscriber, which reads indicators from a JSON file.

The installation bundle includes a file containing some sample indicators; search for indicators.json, which may be installed at /usr/local/share/doc/cyberprobe.

To run this using your existing processing pipeline, stop any running cybermon and evs-elasticsearch processes. Then run cybermon to publish to a topic on Pulsar:

cybermon -p 10000 -c /usr/local/etc/cyberprobe/pulsar.lua

Next run evs-detector to apply indicator rules. By default, this will subscribe to ‘cyberprobe’ and publish to ‘ioc’:

env INDICATORS=/path/to/indicators.json evs-detector \

cyberprobe ioc

Finally, in order to look at the output, we need to subscribe to ‘ioc’:

evs-dump ioc

If you have jq installed, this will make it easier to see when indicators hit:

evs-dump ioc | jq --unbuffered .indicators

This activity should trigger a threat indicator:

wget -q -O- http://www.malware.org/malware.dat

If this works, you should see the following output:

[

{

"description": "URL of a page serving malware",

"category": "malware",

"author": "someone@example.com",

"source": "id:3245edd9-e0f3-4982-9406-fbf93b874555",

"type": "url",

"value": "http://malware.org/malware.dat"

}

]

This hits on a number of threat indicators. The hostname www.malware.org is present in a threat indicator, and it is detected in the HTTP request, and in both the DNS query and response. Also, the URL http://www.malware.org/malware.dat is in a threat indicator, and it is detected in both the HTTP request and response.
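To give a rough idea of how an indicator lookup can work, here is a Python sketch. It indexes indicators by type and value and checks observations extracted from an event against them. It borrows the ‘type’ and ‘value’ fields from the sample output above. The ‘hostname’ type, and the idea of pre-extracting (type, value) pairs from each event, are assumptions for illustration. evs-detector’s actual matching rules may differ.

```python
from typing import Dict, Iterable, List, Tuple

Indicator = Dict[str, str]


def _normalise(kind: str, value: str) -> str:
    # Hostnames compare case-insensitively; URLs are compared exactly
    return value.lower() if kind == "hostname" else value


def match_indicators(indicators: List[Indicator],
                     observed: Iterable[Tuple[str, str]]) -> List[Indicator]:
    """Return each indicator matched by at least one (type, value) observation."""
    index: Dict[Tuple[str, str], List[Indicator]] = {}
    for ind in indicators:
        key = (ind["type"], _normalise(ind["type"], ind["value"]))
        index.setdefault(key, []).append(ind)
    hits: List[Indicator] = []
    for kind, value in observed:
        for ind in index.get((kind, _normalise(kind, value)), []):
            if ind not in hits:  # report each indicator once per event
                hits.append(ind)
    return hits
```

Building the index once makes each observation a single dictionary lookup. This matters when an indicator set holds thousands of entries and every event is checked.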

evs-detector updates its state when the JSON indicator file changes.

If you want to load the output of evs-detector into ElasticSearch, you can, but you need to subscribe to ‘ioc’:

evs-elasticsearch ioc

This results in indicator hit information being loaded into ES.

For more information on indicators, see evs-detector invocation and Cyberprobe indicator format.

See https://github.com/cybermaggedon/threat-exchanges for code which creates indicator sets from threat exchanges.

All done, I hope you enjoyed the tutorial! Any comments on the software, or tutorial itself are very welcome! Positive, or negative, we want to hear how you found the experience.


The cyberprobe and cybermon utilities are used as a pair to analyse network data. The cyberprobe component is used to capture data and forward it to cybermon. When running on a network, you can decide to run several cyberprobe deployments feeding into a single cybermon, or run a cybermon process everywhere you run a cyberprobe.

Once you have decided on your deployment, your setup checklist for using cyberprobe and cybermon consists of:

For cyberprobe, provide the appropriate configuration in the file /usr/local/etc/cyberprobe.cfg. The standard installation will install a template at this location. See cyberprobe configuration on managing this configuration file. Make sure that the configuration file includes the delivery address of the appropriate cybermon.

For cybermon, provide the appropriate configuration in the file /usr/local/etc/cyberprobe/cybermon.lua. The standard installation does not create a file at this location, so you should create one. You can copy an example from the /usr/local/etc/cyberprobe directory. Use /usr/local/etc/cyberprobe/pulsar.lua if you want to use pub/sub delivery. See cybermon configuration for more information on constructing the configuration file, and cybermon example configurations for descriptions of the example configuration files.

The installation includes systemd configuration, so you can enable boot-time starting of cyberprobe or cybermon by using either or both of these commands:

systemctl enable cyberprobe

systemctl enable cybermon

Once enabled, you can reboot, or immediately start the processes using either or both of these commands:

systemctl start cyberprobe

systemctl start cybermon


Events from cybermon can be delivered to a pub/sub mechanism which allows subscribers to connect and disconnect without disrupting delivery to other subscribers. The pub/sub mechanism used is Pulsar, which is a simple, high-performance, distributed exchange.

In order to use this mechanism, you need to ensure you have configured cybermon appropriately. This is normally done by using the pulsar.lua configuration file. Copy it to cybermon.lua in directory /usr/local/etc/cyberprobe/ to use it by default with standard system startup of cybermon.

Alternatively, cybermon can be manually invoked, specifying the pulsar.lua pathname on the command line.

Once running, cybermon will publish all events to Pulsar’s ‘cyberprobe’ topic.

Pulsar allows subscribers to be started and stopped without affecting the delivery of events to other receivers. That is, you can start cybermon with no subscribers, discarding data, and introduce subscribers later.

For more advanced processing scenarios, multiple pub/sub components can be chained, e.g.:

cybermon can be executed with the pulsar.lua configuration to publish events to the Pulsar topic ‘cyberprobe’.

evs-geoip can subscribe to ‘cyberprobe’, and push events containing GeoIP information to ‘geo’.

evs-detector can subscribe to ‘geo’, look up IOCs, and push events with IOC detection information to ‘ioc’.

evs-elasticsearch can subscribe to ‘ioc’ and write events to ElasticSearch.
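The chaining idea can be modelled in a few lines. This Python sketch is a toy in-memory broker, not a Pulsar client. Every subscriber to a topic receives its own copy of each event, and a stage re-publishes enriched events to the next topic. Events on a topic nobody subscribes to are dropped. The sketch ignores Pulsar’s persistence, acknowledgements and subscription types. The ‘location’ and ‘indicators’ fields are placeholders for whatever evs-geoip and evs-detector actually add.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Event = dict
Handler = Callable[[Event], None]


class ToyBroker:
    """In-memory stand-in for a pub/sub exchange (not a Pulsar client)."""

    def __init__(self) -> None:
        self.subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self.subscribers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> None:
        # Each subscriber gets its own copy; with no subscribers the event is dropped
        for handler in list(self.subscribers[topic]):
            handler(dict(event))


def stage(broker: ToyBroker, in_topic: str, out_topic: str,
          enrich: Callable[[Event], Event]) -> None:
    """Subscribe to in_topic, enrich each event, and re-publish it on out_topic."""
    broker.subscribe(in_topic, lambda event: broker.publish(out_topic, enrich(event)))


def build_chain(broker: ToyBroker) -> None:
    # cyberprobe -> geo -> ioc, mirroring the evs-geoip and evs-detector stages
    stage(broker, "cyberprobe", "geo", lambda e: {**e, "location": "placeholder"})
    stage(broker, "geo", "ioc", lambda e: {**e, "indicators": []})
```

Each stage only knows its input and output topic names, which is what lets you insert or remove a stage without touching the others.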


Note: The Cassandra subscriber doesn’t do anything very useful. I recommend skipping this bit.

This subscriber writes data to a Cassandra store in a schema useful for graph analysis.

The schema is experimental, but see https://github.com/cybermaggedon/cassandra-redland for the tooling I’m using.

On the command-line you need to tell the subscriber the location of the Cassandra contact points e.g.

evs-cassandra ioc cas1,cas2,cas3


This subscriber extracts events from pub/sub and formats them for delivery to ElasticSearch. The only piece of information you need is the ElasticSearch base URI, which is used as a command-line parameter, e.g.

evs-elasticsearch ioc http://es-host1:9200

See evs-elasticsearch invocation.


Gaffer is a graph database built on top of Accumulo, Zookeeper and Hadoop. This subscriber writes IP, TCP and UDP communication information into the graph. If you want to use this, get familiar with Gaffer. Gaffer development is hosted on Github at https://github.com/gchq/Gaffer, and I maintain Gaffer containers here:

Gaffer component, provides REST interface running in a Wildfly container.

Accumulo component, with added Gaffer operator library which is necessary to be able to use Gaffer on Accumulo.

Zookeeper container, which is required by Accumulo.

Hadoop container, which is required by Accumulo.

To get started, you can run a Gaffer system by launching with the minimal set of containers:

GAFFER_VERSION=1.1.2

# Run Hadoop

docker run -d --name hadoop cybermaggedon/hadoop:2.8.1

# Run Zookeeper

docker run -d --name zookeeper \

cybermaggedon/zookeeper:3.4.10b

# Run Accumulo

docker run -d --name accumulo --link zookeeper:zookeeper \

--link hadoop:hadoop \

cybermaggedon/accumulo-gaffer:${GAFFER_VERSION}

# Run Wildfly, exposing port 8080.

docker run -d --name wildfly --link zookeeper:zookeeper \

--link hadoop:hadoop --link accumulo:accumulo \

-p 8080:8080 \

cybermaggedon/wildfly-gaffer:${GAFFER_VERSION}

The Gaffer/Wildfly component takes about 30 seconds to bed in. Once working, you can check the status of Gaffer by interacting with the REST API. This command should return the Graph schema, which is a JSON object:

wget -q -O- http://localhost:8080/rest/v1/graph/schema

You can fetch the entire graph using this command. Initially, the graph will be empty. This command may take a long time to run once the graph holds lots of data:

wget -q -O- --header 'Content-Type: application/json' \

--post-data '

{"class": "uk.gov.gchq.gaffer.operation.impl.get.GetAllElements"}

' http://localhost:8080/rest/v2/graph/operations/execute

On the command-line you need to tell the subscriber the location of the Gaffer REST API, e.g.

evs-gaffer ioc \

http://localhost:8080/rest/v1


The evs-monitor subscriber takes events and writes human-readable output on standard output. This is a useful means to verify that cyberprobe, cybermon and pub/sub are configured correctly.


The cybermon, subscriber components and data stores can easily be deployed in containers to form a scalable processing system.

To illustrate this in use, we distribute a Docker Compose configuration which can be used to start:

cybermon, listening on port 9000.

evs-geoip container, adding GeoIP information to events.

evs-detector container, adding IOC information to events

from a sample STIX data set.

evs-elasticsearch container, to load information into

ElasticSearch.

evs-gaffer container, to load information into Gaffer.

elasticsearch container to store events.

kibana container to visualise events.

You can see the Docker Compose configuration at the path:

/usr/local/share/doc/cyberprobe/docker-compose.yml

In order to invoke this run:

cd /usr/local/share/doc/cyberprobe/

docker-compose up

No data is stored persistently - you can change how this works by changing the docker-compose.yml file. It takes about a minute to settle down, at which point, you need to generate data using cyberprobe and send to port 9000.

You can connect to the Kibana instance on port 5601. The first thing you will need to do is to go to the Management > Index Patterns dialogue, and create an index pattern for index ‘cyberprobe’, with time specified in the ‘time’ field.

You may want to install our data dashboards, using Management > Saved Objects and press the Import button. The dashboard file is installed at:

/usr/local/share/doc/cyberprobe/kibana-dashboards.json

ElasticSearch bails out unless the ‘vm.max_map_count’ setting is high enough. If you have problems, try:

sudo sysctl vm.max_map_count=512000

There is a second configuration which adds Snort and Cyberprobe to the deployment. This accesses the host network interface by providing host network access to the cyberprobe and snort containers.

The network interface name is specified in the cyberprobe.cfg file for cyberprobe and the docker-compose-cp-snort.yml file for snort, so you will need to edit these accordingly.

cd /usr/local/share/doc/cyberprobe/

docker-compose \

-f /usr/local/share/doc/cyberprobe/docker-compose-cp-snort.yml up

The configuration triggers packet acquisition as soon as any port 80 or port 11111 data is observed, e.g.

wget -q -O- http://www.example.org/


AWS allows you to mirror network traffic traversing a network interface (ENI) to another ENI, essentially providing the equivalent of an Ethernet tap in a data centre. Traffic Mirroring allows you to select network interfaces or NLBs for traffic mirroring. Filters can be applied to select the subset of traffic which will be mirrored, and a target interface allows you to specify an ENI or load-balancer to receive the data. Data is delivered in VXLAN format: essentially, Ethernet frames wrapped in an 8-byte header. These frames are delivered over UDP to port 4789.

Cyberprobe provides two ways to integrate with AWS Traffic Mirroring:

cyberprobe implements VXLAN reception. If an interface is defined whose name has a vxlan:PORT form, then a VXLAN receiver is started on the specified UDP port number. For AWS traffic mirroring, delivery is on port 4789, so the interface name vxlan:4789 would be used.

cybermon implements VXLAN reception with the -V option, allowing a port to be specified. Events produced from a VXLAN feed have the device ID set to the string ‘VNI’ plus the VNI identifier in decimal.

Using VXLAN support in cyberprobe provides more flexibility, as this allows delay line and filter commands to be used. Also, IP addresses can be mapped to device IDs.

Using VXLAN support in cybermon means that cyberprobe does not need to be used, and provides for a simpler architecture.
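The VXLAN encapsulation described above is simple enough to decode by hand. This Python sketch follows the VXLAN header layout in RFC 7348. It extracts the VNI and the inner Ethernet frame from a UDP payload, and it builds a device ID in the ‘VNI’ plus decimal form described for cybermon. It illustrates the format only and is not how cyberprobe or cybermon implement reception. The function names are hypothetical.

```python
import socket
import struct
from typing import Tuple

VXLAN_PORT = 4789  # UDP port used by AWS Traffic Mirroring


def parse_vxlan(payload: bytes) -> Tuple[int, bytes]:
    """Split a VXLAN UDP payload into (VNI, inner Ethernet frame), per RFC 7348."""
    if len(payload) < 8:
        raise ValueError("payload shorter than the 8-byte VXLAN header")
    # Byte 0: flags; bytes 1-3: reserved; bytes 4-6: VNI; byte 7: reserved
    flags, vni_word = struct.unpack("!B3xI", payload[:8])
    if not flags & 0x08:
        raise ValueError("VNI-valid (I) flag not set")
    return vni_word >> 8, payload[8:]


def device_id(vni: int) -> str:
    # 'VNI' followed by the VNI in decimal
    return f"VNI{vni}"


def receive(port: int = VXLAN_PORT) -> None:
    """Print device ID and inner frame length for each datagram (runs forever)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind(("0.0.0.0", port))
    while True:
        payload, _ = sock.recvfrom(65535)
        try:
            vni, frame = parse_vxlan(payload)
        except ValueError:
            continue  # ignore malformed datagrams
        print(device_id(vni), len(frame))
```

receive() must run on the host that the mirror target delivers to, and it listens until interrupted. Using the VNI as the device ID keeps traffic from different mirror sessions apart without any IP address configuration.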


cyberprobe includes support for Endace DAG. This is presently not distributed. If you compile cyberprobe on a host which has the DAG library (libdag) installed, it will be detected at the configure step.

If DAG support is compiled in, then the DAG devices can be referenced in the cyberprobe.cfg file using the prefix ‘dag’ plus the card number e.g.

...

"interfaces": [

{ "interface": "dag0" }

]

...

To use DAG devices, you need to load DAG firmware, and set all appropriate card options using dagload and dagconfig prior to starting cyberprobe.


cyberprobe invocation

cyberprobe is a network monitor which collects packets matching an IP address list. The packets collected are streamed using network streaming protocols. The IP address match list can be statically configured (in a configuration file), can be dynamically changed using a management interface, or can be dynamically changed as a result of Snort alerts.

Synopsis:

cyberprobe configuration-file

The configuration-file argument names the configuration file; see cyberprobe configuration.

cyberprobe executes indefinitely; to end the program, send it a signal, e.g.

killall cyberprobe


cyberprobe configuration

The configuration file is re-read when it changes, and changes are immediately actioned.

Sample configuration:

{

"interfaces": [

{

"interface": "eth0",

"filter": "not port 10001 and not port 10002",

"delay": 0.5

},

{

"interface": "eth1"

}

],

"targets": [

{ "address": "192.168.1.1", "device": "sam-iphone" },

{ "address": "192.168.1.2", "device": "fred-android" },

{ "address": "10.2.0.0/16", "device": "lisa-mac" },

{ "address": "10.1.1.0", "device": "suzie-mac" },

{ "address": "10.1.1.2", "device": "suzie-android" },

{ "address": "10.1.1.3", "device": "suzie-iphone" },

{ "address": "10.1.1.4", "device": "imogen-pc" },

{ "address": "10.1.1.5", "device": "imogen-pc" },

{ "address": "10.1.1.6", "device": "imogen-pc" },

{ "address": "10.1.1.7", "device": "imogen-ipad" },

{ "address": "10.1.1.8", "device": "imogen-android" },

{ "address": "10.1.1.9", "device": "imogen-android" },

{ "address": "10.1.1.10", "device": "will-tablet" },

{ "address": "aaaa:bbbb:cccc:dddd::4:5:6", "class": "ipv6",

"device": "sam-pc" },

{ "address": "aaaa:bbbb:cccc::/48", "class": "ipv6",

"device": "sam-pc" }

],

"endpoints": [

{

"hostname": "monitor1",

"port": 10001,

"type": "nhis1.1"

},

{

"hostname": "monitor2",

"port": 10002,

"type": "etsi"

}

],

"parameters": {

"country": "DE",

"operator": "Cyber",

"network_element": "10.8.2.4",

"interception_point": "abcd1234",

"username.123456": "user01@example.org",

"username.123981": "user02@example.org",

"username.981235": "user03@example.org",

"snort.1.liid": "SNORT1",

"snort.2.liid": "SNORT2"

},

"snort-alerters": [

{

"duration": 30,

"path": "/tmp/alert"

}

]

}

The control element is optional. If it exists, cyberprobe runs a management interface on the specified port. The port, username and password attributes must be specified. See Management interface for how to communicate with that interface.

The interfaces block defines a set of interfaces to sniff. The interface attribute is mandatory. The filter attribute is optional and, if specified, should describe a BPF (Berkeley Packet Filter) expression. The delay attribute can be used to specify, in seconds, the duration to wait before packets are processed. The delay is specified as a floating-point decimal. If the interface name is of the form vxlan:PORT, then a VXLAN receiver is run on the specified port number for reception of e.g. AWS Traffic Mirroring.

The targets block defines IP addresses to match. The address attribute defines the IP address, with an optional mask, used for the address match. If a mask is specified, this describes the subset of the address which will be used for matching. For instance, if 192.168.0.0/16 is specified, then a 16-bit mask will be applied, which makes this a class-B address match. That is, any address in the 192.168.0.0-192.168.255.255 range will match.

If no mask is specified, then this is an exact match against a single address.

The device attribute defines the device ID which will be applied if this particular IP address is detected.

The optional network attribute defines the network (ETSI NetworkElementID), which, if specified, will be transmitted in the ETSI stream, and delivered as the JSON ‘network’ element in cybermon output.

The address must be an IP address, and not a hostname. The address can be an IPv6 address if the class attribute is included, and set to ipv6.

Device IDs can occur in multiple places in the target block, allowing multiple IP addresses to match to the same device ID, but the same IP address/mask specifier should only occur once in the target block.

If subnetwork ranges overlap, the longest prefix match applies.
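The matching rules above can be sketched with Python’s ipaddress module. An address without a mask is an exact match, an address with a mask matches its range, and the longest prefix wins where ranges overlap. This illustrates the rule and is not cyberprobe’s implementation. Most SAMPLE entries come from the sample configuration. The 10.2.3.0/24 entry and its device name are hypothetical, added to show an overlap.

```python
import ipaddress
from typing import Dict, List, Optional, Tuple, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


def build_targets(entries: List[Dict[str, str]]) -> List[Tuple[Network, str]]:
    # An address without a mask becomes a /32 (IPv4) or /128 (IPv6): an exact match
    return [(ipaddress.ip_network(e["address"], strict=False), e["device"])
            for e in entries]


def match_device(targets: List[Tuple[Network, str]], address: str) -> Optional[str]:
    """Return the device ID of the longest matching prefix, or None."""
    addr = ipaddress.ip_address(address)
    best: Optional[Tuple[Network, str]] = None
    for network, device in targets:
        if addr.version == network.version and addr in network:
            if best is None or network.prefixlen > best[0].prefixlen:
                best = (network, device)
    return best[1] if best else None


SAMPLE = [
    {"address": "10.2.0.0/16", "device": "lisa-mac"},
    {"address": "10.2.3.0/24", "device": "lab-subnet"},  # hypothetical overlap
    {"address": "10.1.1.5", "device": "imogen-pc"},
    {"address": "aaaa:bbbb:cccc::/48", "device": "sam-pc"},
]
```

A linear scan is fine for a handful of targets. For large target lists, a radix trie keyed on address bits gives the same answer without examining every rule.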

The device and network can contain template constructs:

This is replaced with the IP address which causes a match.

This is replaced with the IP address in the target rule - useful if this is a subnetwork address.

This is replaced with the source MAC address in the header of the packet which causes a match.

This is replaced with the VLAN ID in the header of the packet which causes a match.

This is replaced with a literal %.

The endpoints block defines a set of addresses for delivery. The hostname and port attributes should be used to describe the endpoint address. The type attribute should be nhis1.1 or etsi to specify which output stream format to use. The transport attribute describes the transport type, which should be tcp for a standard TCP stream, or tls for an SSL/TLS stream. If TLS is invoked, the attributes certificate, key and trusted-ca should be specified, with filenames for the client certificate, private key, and trusted CA chain. These should all be in PEM format.
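A quick pre-flight check of the endpoints block can catch missing TLS files before cyberprobe starts. The Python sketch below applies the rules stated above. Treating a missing transport as tcp is an assumption, chosen to match the management interface default. endpoint_problems and check_config are hypothetical helper names.

```python
import json
from typing import Dict, List


def endpoint_problems(endpoint: Dict) -> List[str]:
    """Return a list of problems with one entry of the endpoints block."""
    problems = []
    for attr in ("hostname", "port", "type"):
        if attr not in endpoint:
            problems.append(f"missing {attr}")
    if "type" in endpoint and endpoint["type"] not in ("nhis1.1", "etsi"):
        problems.append(f"unknown type {endpoint['type']!r}")
    transport = endpoint.get("transport", "tcp")  # assumed default
    if transport not in ("tcp", "tls"):
        problems.append(f"unknown transport {transport!r}")
    if transport == "tls":
        # TLS needs a client certificate, private key and trusted CA chain (PEM)
        for attr in ("certificate", "key", "trusted-ca"):
            if attr not in endpoint:
                problems.append(f"tls endpoint missing {attr}")
    return problems


def check_config(path: str) -> List[str]:
    """Return problems found in every endpoint of a cyberprobe JSON config file."""
    with open(path) as f:
        config = json.load(f)
    report = []
    for endpoint in config.get("endpoints", []):
        for problem in endpoint_problems(endpoint):
            report.append(f"{endpoint.get('hostname', '?')}: {problem}")
    return report
```

For example, check_config("/usr/local/etc/cyberprobe.cfg") returns one line per problem, and an empty list when every endpoint looks complete.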

The optional parameters block defines a set of parameters which are only used in ETSI delivery. Each entry maps a parameter key to a value. The parameter values for country, operator, network_element and interception_point describe values which are used in the PSHeader and IRI constructs. The parameters with prefix username. describe values for the username values in the IPIRI construct in ETSI LI. The key is the literal username. suffixed with the device ID; if such an entry is present, it is used for the username. All parameters are optional; meaningless defaults (e.g. unknown) will be used if not specified.

The etsi-streams parameter specifies the number of TCP streams which will be opened for delivery, the default being 12. This feature potentially increases throughput, and is useful if the destination is a load-balanced resource.


cyberprobe-cli invocation

cyberprobe-cli connects to cyberprobe on the management port to allow dynamic administration of resources.

Note: You can end up in a confusing situation if you use both the configuration file, and the management interface to configure resources. It is best to use one or the other. You can safely use the configuration file for resources that you don’t intend to change through the management interface, but you shouldn’t use both the configuration file and management interface to change the same resources.

Synopsis:

cyberprobe-cli HOST PORT

Example:

cyberprobe-cli vpn-host031 8888

HOST: Specifies the hostname or IP address of the host to connect to.

PORT: Specifies the management port number.

On connection, you are prompted to enter a username and password. After successful authentication, you are offered a command-line prompt for administration commands.


cyberprobe-cli commands

The following commands are supported by cyberprobe-cli:

Adds a delivery endpoint.

Specifies the delivery host.

Specifies TCP port to deliver to.

Can be one of ‘nhis’ or ‘etsi’ for delivery protocol.

Can be one of ‘tcp’ or ‘tls’ for TCP or TLS transports. Defaults to ‘tcp’.

Filename of a key file in PEM format if ‘tls’ is specified.

Filename of an X.509 file in PEM format if ‘tls’ is specified.

Filename of trusted CA certs in PEM format if ‘tls’ is specified.

Note: It is currently not possible to specify the appropriate transport parameters for TLS delivery using the management interface.

Adds an interface for packet sniffing.

Interface name.

Delay between packet acquisition and delivery. Defaults to zero.

Optional; specifies a filter, in BPF / libpcap format, to be applied for positive selection of packets.

Adds a parameter.

Parameter key.

Parameter value.

Adds an address target for packet capture.

Device identifier.

Address protocol, one of ‘ipv4’ or ‘ipv6’.

Address value, in IPv4 or IPv6 format according to the PROTOCOL value. Optionally can have a ‘/mask’ suffix.

Causes the client to close the connection and terminate.

Removes an endpoint added through the ‘add endpoint’ command. The HOST, PORT, TYPE, TRANSPORT, ... values are the same as for ‘add endpoint’.

Removes an interface added through the ‘add interface’ command. The INTERFACE, DELAY and FILTER values are the same as for ‘add interface’.

Removes a parameter added through the ‘add parameter’ command. The KEY and VALUE values are the same as for ‘add parameter’.

Removes a target added through the ‘add target’ command. The PROTOCOL and ADDRESS values are the same as for ‘add target’.

Displays a table showing endpoints.

Displays a table showing interfaces.

Displays a table showing parameters.

Displays a table showing targets.
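Before sending an add target command, a script might check that the ADDRESS value agrees with PROTOCOL. The Python sketch below does this with the standard ipaddress module. The function name is hypothetical. Accepting addresses with host bits set under a mask (strict=False) is an assumption about what cyberprobe tolerates.

```python
# Hypothetical pre-check for 'add target' arguments; not part of cyberprobe-cli.
import ipaddress


def check_target(protocol: str, address: str) -> str:
    """Validate ADDRESS (optionally with a /mask suffix) against PROTOCOL.

    Returns the normalised network in CIDR form, or raises ValueError.
    """
    expected = {"ipv4": 4, "ipv6": 6}.get(protocol)
    if expected is None:
        raise ValueError(f"protocol must be 'ipv4' or 'ipv6', not {protocol!r}")
    # strict=False accepts e.g. 10.1.2.3/24 (host bits set): an assumption.
    network = ipaddress.ip_network(address, strict=False)
    if network.version != expected:
        raise ValueError(f"{address} is not an {protocol} address")
    return str(network)


if __name__ == "__main__":
    print(check_target("ipv4", "192.168.0.0/16"))  # 192.168.0.0/16
    print(check_target("ipv4", "10.1.2.3"))        # 10.1.2.3/32
    print(check_target("ipv6", "2001:db8::/32"))   # 2001:db8::/32
```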


eventstream-service invocation

eventstream-service is a demonstrator gRPC service for cyberprobe gRPC. It isn’t particularly useful for anything other than demonstrating, debugging and diagnosing gRPC problems.

It receives gRPC requests containing event data, and outputs these in JSON form, one event per line. This JSON is the default mapping for Protobuf data produced by the Protobuf libraries, and is not identical to the Cyberprobe JSON format.

The eventstream-service.C code may be useful in building your own gRPC service.

Synopsis:

eventstream-service [HOST:PORT]

Example:

eventstream-service 0.0.0.0:9100

HOST: Specifies the hostname or IP address to bind this service to, to receive requests. 0.0.0.0 means any address.

PORT: Specifies the port number to listen on.

Output streaming protocols

cyberprobe supports packet output in one of two formats, both of which are LI (lawful interception) formats. LI formats were chosen because they set good, open standards for streaming packets to a destination. There are also existing security products, such as firewalls and analysis tools, which understand these protocols. The two formats are ETSI LI and NHIS 1.1.

The first of the formats supported is the ETSI LI format (see ETSI TS 102 232), which is used in Europe and internationally. The protocol is described using an ASN.1 specification which can be downloaded from the ETSI web-site. Google can find the standards. The over-arching TS 102 232-1 standard describes the transport, while the TS 102 232-3 standard describes putting the IP packets in the transport.

Those averse to the use of ASN.1 technology may prefer the second format.

NHIS 1.1 was defined for use in the UK in the 90s, based on GLIC in ETSI TS 101 671. It is a much simpler header protocol than ETSI LI, and needs less work to decode.

The standard used to be available on the http://gliif.org website, but that site has recently gone offline.

The bluffer’s guide to decoding goes...

cyberprobe automatically reconnects to failed destinations, but the buffering strategy is very simple. When a destination fails, packets are buffered in a small queue; buffering is limited, so once the queue fills, packets start to be dropped. The locking strategy is also simple, so the loss of a single endpoint currently results in data loss to all endpoints. This may be a problem in operational scenarios where high data availability is required.
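The "small queue, then drop" behaviour can be pictured with the Python sketch below. It is an illustration, not cyberprobe's code. The class name is hypothetical, and discarding the newest packet when the queue is full is an assumption; the real queue may choose which packets to drop differently.

```python
# Illustration of a bounded buffer that drops packets once full.
# Assumption: new packets are discarded when the queue is full.
from collections import deque
from typing import Deque, Iterator


class BoundedPacketQueue:
    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.packets: Deque[bytes] = deque()
        self.dropped = 0

    def offer(self, packet: bytes) -> bool:
        """Queue a packet; return False (and count a drop) if the queue is full."""
        if len(self.packets) >= self.capacity:
            self.dropped += 1
            return False
        self.packets.append(packet)
        return True

    def drain(self) -> Iterator[bytes]:
        """Yield queued packets in arrival order, e.g. after reconnecting."""
        while self.packets:
            yield self.packets.popleft()


if __name__ == "__main__":
    q = BoundedPacketQueue(capacity=3)
    for i in range(5):        # destination is down while 5 packets arrive
        q.offer(bytes([i]))
    print(q.dropped)          # 2
    print(list(q.drain()))    # [b'\x00', b'\x01', b'\x02']
```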

cyberprobe includes some code to decode the ETSI and NHIS streams, and also includes two test utilities, etsi-rcvr and nhis11-rcvr, which listen on a specified port number, decode the stream data, and forward it in PCAP format on standard output. Example usage would be:

etsi-rcvr 10001 | tcpdump -n -r-

nhis11-rcvr 10000 | tcpdump -n -r-
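To show what the receivers hand to tcpdump, the Python sketch below writes packets in the classic libpcap file format to a binary stream. It is not the etsi-rcvr code. The link type (101, LINKTYPE_RAW, i.e. bare IP packets with no link-layer header) and the 65535-byte snaplen are assumptions.

```python
# Minimal classic-pcap writer, for illustration; not taken from cyberprobe.
import io
import struct
import time
from typing import BinaryIO

PCAP_MAGIC = 0xA1B2C3D4
LINKTYPE_RAW = 101   # assumption: raw IP packets, no link-layer header
SNAPLEN = 65535      # assumption


def write_global_header(out: BinaryIO) -> None:
    # magic, version 2.4, thiszone, sigfigs, snaplen, link type (little-endian)
    out.write(struct.pack("<IHHiIII", PCAP_MAGIC, 2, 4, 0, 0, SNAPLEN, LINKTYPE_RAW))


def write_packet(out: BinaryIO, packet: bytes, timestamp: float) -> None:
    # Per-record header: seconds, microseconds, captured length, original length.
    seconds = int(timestamp)
    micros = int((timestamp - seconds) * 1_000_000)
    captured = packet[:SNAPLEN]
    out.write(struct.pack("<IIII", seconds, micros, len(captured), len(packet)))
    out.write(captured)


if __name__ == "__main__":
    buf = io.BytesIO()
    write_global_header(buf)
    write_packet(buf, b"\x45" + bytes(19), time.time())  # placeholder 20-byte payload
    print(len(buf.getvalue()))  # 24 (file header) + 16 (record header) + 20 = 60
```

Writing to sys.stdout.buffer instead of a BytesIO would produce a stream that can be piped into tcpdump -n -r- in the same way as the receivers' output.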

Management protocol

The management interface is a simple interface which supports inspecting and dynamically changing the cyberprobe configuration: endpoints, targets and interfaces.

The configuration file specifies the port number, username and password for the interface.

The interface is intended to be used programmatically. It is a request/response interface.

Note that the protocol is sent in the clear, so it should not be used across a hostile network.

Each request is a JSON object encoded on a single line terminated with ‘NEWLINE’. Each request is required to have an action field which describes the action to take. One action is special: auth; other actions cannot be used until authentication has taken place.

Example request:

{"action":"auth","password":"admin","username":"horse-battery-staple"}

Each response is preceded by a base-10 encoded, human-readable byte length terminated by ‘NEWLINE’, after which the response is sent. The response is a JSON payload. The status field contains a numeric response code; statuses in the range 200-299 indicate success.

The message field contains a human-readable response string, which will be an error message in the error case.

Other fields depend on the request.

The following values of the action field are supported. JSON has been pretty-printed to aid understanding.

auth

Must be accompanied by valid username and password fields in order to authenticate. The response contains status and message fields.

Example request:

{

"action":"auth",

"password":"admin",

"username":"horse-battery-staple"

}

Example response:

{"message":"Authenticated.","status":200}

add-interface

Starts packet capture from an interface. The request should have an interface field containing an interface specification.

Example request:

{

"action": "add-interface",

"interface": {

"delay": 0.5,

"filter": "not port 9876",

"interface": "vxlan:9876"

}

}

Example response:

{"message":"Interface added.","status":200}

remove-interface

Removes a previously enabled packet capture.

Example request:

{

"action": "remove-interface",

"interface": {

"delay": 0.5,

"filter": "not port 9876",

"interface": "vxlan:9876"

}

}

Example response:

{"message":"Interface removed.","status":200}

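get-interfaces

Lists all interfaces. Each entry in the interfaces array of the response describes one interface by its interface, delay and filter fields (iface:delay:filter).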

Example request:

{

"action": "get-interfaces"

}

Example response:

{

"interfaces": [

{

"delay": 0.5,

"filter": "not port 10001 and not port 10002",

"interface": "vxlan:4789"

},

{

"delay": 0.3,

"filter": "",

"interface": "vxlan:4790"

},

{

"delay": 0.5,

"filter": "not port 9876",

"interface": "vxlan:9876"

}

],

"message": "Interfaces list.",

"status": 201

}
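The iface:delay:filter form mentioned above can be produced from the JSON response with a small Python helper. The helper name is hypothetical.

```python
# Hypothetical helper: render a get-interfaces response as iface:delay:filter lines.
from typing import Any, Dict, List


def interface_lines(response: Dict[str, Any]) -> List[str]:
    """Return one 'iface:delay:filter' string per entry in the 'interfaces' list."""
    return [
        f"{entry['interface']}:{entry['delay']}:{entry.get('filter', '')}"
        for entry in response.get("interfaces", [])
    ]


if __name__ == "__main__":
    response = {
        "interfaces": [
            {"delay": 0.5, "filter": "not port 10001 and not port 10002",
             "interface": "vxlan:4789"},
            {"delay": 0.3, "filter": "", "interface": "vxlan:4790"},
        ],
        "message": "Interfaces list.",
        "status": 201,
    }
    for line in interface_lines(response):
        print(line)
    # vxlan:4789:0.5:not port 10001 and not port 10002
    # vxlan:4790:0.3:
```

Interface names such as vxlan:4789 themselves contain colons, so the joined form cannot be split back reliably on ':'. Scripts are better off reading the JSON fields directly.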

add-endpoint

Adds an endpoint to deliver data to.

Example request:

{

"action": "add-endpoint",

"endpoint": {

"certificate": "cert.crt",

"hostname": "receiver",

"key": "key.pem",

"port": 10000,

"transport": "tls",

"trusted-ca": "ca.crt",

"type": "etsi"

}

}

Example response:

{"message":"Endpoint added.","status":200}
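The remove-endpoint request repeats the full endpoint description used when adding it, so it can help to build both requests from one function. The Python sketch below is hypothetical. Its field names follow the JSON examples. Its validation rules are assumptions drawn from the configuration description: type is etsi or nhis1.1, and tls needs certificate, key and trusted-ca.

```python
# Hypothetical request builder for add-endpoint / remove-endpoint.
import json
from typing import Any, Dict, Optional


def endpoint_request(action: str, hostname: str, port: int, type_: str,
                     transport: str = "tcp", certificate: Optional[str] = None,
                     key: Optional[str] = None,
                     trusted_ca: Optional[str] = None) -> Dict[str, Any]:
    """Build an endpoint request; raises ValueError on inconsistent fields."""
    if action not in ("add-endpoint", "remove-endpoint"):
        raise ValueError(f"unexpected action {action!r}")
    if type_ not in ("etsi", "nhis1.1"):
        raise ValueError("type must be 'etsi' or 'nhis1.1'")
    endpoint: Dict[str, Any] = {"hostname": hostname, "port": port,
                                "transport": transport, "type": type_}
    if transport == "tls":
        if not (certificate and key and trusted_ca):
            raise ValueError("tls transport needs certificate, key and trusted-ca")
        endpoint.update({"certificate": certificate, "key": key,
                         "trusted-ca": trusted_ca})
    elif transport != "tcp":
        raise ValueError("transport must be 'tcp' or 'tls'")
    return {"action": action, "endpoint": endpoint}


if __name__ == "__main__":
    req = endpoint_request("add-endpoint", "receiver", 10000, "etsi", "tls",
                           certificate="cert.crt", key="key.pem", trusted_ca="ca.crt")
    print(json.dumps(req, sort_keys=True))
```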

remove-endpoint

Removes a previously enabled endpoint.

Example request:

{

"action": "remove-endpoint",

"endpoint": {

"certificate": "cert.crt",

"hostname": "receiver",

"key": "key.pem",

"port": 10000,

"transport": "tls",

"trusted-ca": "ca.crt",

"type": "etsi"

}

}

Example response:

{"message":"Endpoint removed.","status":200}

get-endpoints

Gets the endpoint list.

Example request:

{"action":"get-endpoints"}

Example response:

{

"endpoints": [

{

"hostname": "localhost",

"port": 9000,

"transport": "tcp",

"type": "etsi"

},

{

"hostname": "localhost",

"port": 9002,

"transport": "tcp",

"type": "nhis1.1"

},

{

"certificate": "cert.crt",

"hostname": "receiver",

"key": "key.pem",